Daniil Lisus

Do We Still Need to Work on Odometry for Autonomous Driving?

May 07, 2025

Abstract:Over the past decades, a tremendous amount of work has addressed the topic of ego-motion estimation of moving platforms based on various proprioceptive and exteroceptive sensors. At the cost of ever-increasing computational load and sensor complexity, odometry algorithms have reached impressive levels of accuracy with minimal drift in various conditions. In this paper, we question the need for more research on odometry for autonomous driving by assessing the accuracy of one of the simplest algorithms: the direct integration of wheel encoder data and yaw rate measurements from a gyroscope. We denote this algorithm as Odometer-Gyroscope (OG) odometry. This work shows that OG odometry can outperform current state-of-the-art radar-inertial SE(2) odometry for a fraction of the computational cost in most scenarios. For example, the OG odometry is on top of the Boreas leaderboard with a relative translation error of 0.20%, while the second-best method displays an error of 0.26%. Lidar-inertial approaches can provide more accurate estimates, but the computational load is three orders of magnitude higher than the OG odometry. To further the analysis, we have pushed the limits of the OG odometry by purposely violating its fundamental no-slip assumption using data collected during a heavy snowstorm with different driving behaviours. Our conclusion shows that a significant amount of slippage is required to result in non-satisfactory pose estimates from the OG odometry.
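To make "direct integration of wheel encoder data and yaw rate measurements" concrete, here is a minimal sketch of SE(2) dead reckoning. It is not the authors' implementation: the function name `integrate_og`, the `(dt, speed, yaw_rate)` sample layout, and the midpoint-heading update are illustrative assumptions, and the sketch relies on the same no-slip assumption the abstract mentions.

```python
import math


def integrate_og(samples, x=0.0, y=0.0, yaw=0.0):
    """Dead-reckon a planar pose from wheel speed and gyroscope yaw rate.

    `samples` is an iterable of (dt, speed, yaw_rate) tuples (hypothetical
    layout): dt in seconds, forward speed in m/s derived from the wheel
    encoders, yaw rate in rad/s from the gyroscope.
    Assumes no wheel slip, so the velocity points along the heading.
    """
    for dt, speed, yaw_rate in samples:
        dyaw = yaw_rate * dt
        # Use the heading at the middle of the interval; for constant
        # speed and yaw rate this keeps the pose on the true arc's chord.
        mid_yaw = yaw + 0.5 * dyaw
        x += speed * dt * math.cos(mid_yaw)
        y += speed * dt * math.sin(mid_yaw)
        yaw += dyaw
    return x, y, yaw


if __name__ == "__main__":
    # One full circle at 5 m/s and 0.5 rad/s should end near the start.
    n = 1000
    dt = (2.0 * math.pi / 0.5) / n
    print(integrate_og([(dt, 5.0, 0.5)] * n))
```

Each step costs a handful of floating-point operations, which is consistent with the abstract's point about computational load, although the figures reported there come from the paper and not from this sketch.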

DRO: Doppler-Aware Direct Radar Odometry

Apr 29, 2025

Abstract:A renaissance in radar-based sensing for mobile robotic applications is underway. Compared to cameras or lidars, millimetre-wave radars have the ability to 'see' through thin walls, vegetation, and adverse weather conditions such as heavy rain, fog, snow, and dust. In this paper, we propose a novel SE(2) odometry approach for spinning frequency-modulated continuous-wave radars. Our method performs scan-to-local-map registration of the incoming radar data in a direct manner using all the radar intensity information without the need for feature or point cloud extraction. The method performs locally continuous trajectory estimation and accounts for both motion and Doppler distortion of the radar scans. If the radar possesses a specific frequency modulation pattern that makes radial Doppler velocities observable, an additional Doppler-based constraint is formulated to improve the velocity estimate and enable odometry in geometrically feature-deprived scenarios (e.g., featureless tunnels). Our method has been validated on over 250 km of on-road data sourced from public datasets (Boreas and MulRan) and collected using our automotive platform. With the aid of a gyroscope, it outperforms state-of-the-art methods and achieves an average relative translation error of 0.26% on the Boreas leaderboard. When using data with the appropriate Doppler-enabling frequency modulation pattern, the translation error is reduced to 0.18% in similar environments. We also benchmarked our algorithm using 1.5 hours of data collected with a mobile robot in off-road environments with various levels of structure to demonstrate its versatility. Our real-time implementation is publicly available: https://github.com/utiasASRL/dro.

Balancing Act: Trading Off Doppler Odometry and Map Registration for Efficient Lidar Localization

Mar 03, 2025

Abstract:Most autonomous vehicles rely on accurate and efficient localization, which is achieved by comparing live sensor data to a preexisting map, to navigate their environment. Balancing the accuracy of localization with computational efficiency remains a significant challenge, as high-accuracy methods often come with higher computational costs. In this paper, we present two ways of improving lidar localization efficiency and study their impact on performance. First, we integrate a lightweight Doppler-based odometry method into a topometric localization pipeline and compare its performance against an iterative closest point (ICP)-based method. We highlight the trade-offs between these approaches: the Doppler estimator offers faster, lightweight updates, while ICP provides higher accuracy at the cost of increased computational load. Second, by controlling the frequency of localization updates and leveraging odometry estimates between them, we demonstrate that accurate localization can be maintained while optimizing for computational efficiency using either odometry method. Our experimental results show that localizing every 10 lidar frames strikes a favourable balance, achieving a localization accuracy below 0.05 meters in translation and below 0.1 degrees in orientation while reducing computational effort by over 30% in an ICP-based pipeline. We quantify the trade-off of accuracy to computational effort using over 100 kilometers of real-world driving data in different on-road environments.
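The idea of localizing only every few frames and relying on odometry in between can be sketched as a simple scheduling loop. This is an illustrative outline, not the paper's pipeline: `track`, `localize`, and `propagate` are hypothetical names, and the two callbacks stand in for whatever map registration and odometry methods are actually used.

```python
def track(frames, localize, propagate, every=10):
    """Return one pose per frame, localizing only on every `every`-th frame.

    localize(frame, prior_pose) -> pose   # expensive map registration
    propagate(pose, frame) -> pose        # cheap odometry update
    Both callbacks are hypothetical placeholders supplied by the caller.
    """
    if every < 1:
        raise ValueError("every must be a positive integer")
    poses = []
    pose = None
    for index, frame in enumerate(frames):
        if index % every == 0:
            # Correct accumulated drift against the map.
            pose = localize(frame, pose)
        else:
            # Bridge the gap between localizations with odometry.
            pose = propagate(pose, frame)
        poses.append(pose)
    return poses


if __name__ == "__main__":
    # Toy 1D example: odometry overestimates each 1 m step by 10 %.
    frames = [(float(i), 1.1) for i in range(25)]
    poses = track(
        frames,
        localize=lambda frame, prior: frame[0],
        propagate=lambda pose, frame: pose + frame[1],
        every=10,
    )
    print(poses[9], poses[10])
```

In the toy run, drift grows between localizations and is reset at frames 0, 10, and 20, which mirrors the accuracy-versus-effort trade-off described in the abstract.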

Are Doppler Velocity Measurements Useful for Spinning Radar Odometry?

Apr 02, 2024

Abstract:Spinning, frequency-modulated continuous-wave (FMCW) radar has been gaining popularity for autonomous vehicle navigation. The spinning radar is chosen over the more classic automotive 'fixed' radar as it is able to capture the full 360-degree field of view without requiring multiple sensors and extensive calibration. However, commercially available spinning radar systems have not previously had the ability to extract radial velocities due to the lack of repeated measurements in the same direction and fundamental hardware setup. A new firmware upgrade now makes it possible to alternate the modulation of the radar signal between azimuths. In this paper, we first present a way to use this alternating modulation to extract radial Doppler velocity measurements from single raw radar intensity scans. We then incorporate these measurements in two different modern odometry pipelines and evaluate them in progressively challenging autonomous driving environments. We show that using Doppler velocity measurements enables our odometry to continue functioning at a state-of-the-art level even in severely geometrically degenerate environments.
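One way to see why radial Doppler velocities help in geometrically degenerate scenes is that they constrain the sensor's planar velocity directly, without any scan matching. The sketch below fits a 2D velocity to per-azimuth radial speeds by linear least squares. It is not the paper's method: the function name, the assumption of a static scene, and the sign convention (each measurement equals the projection of the sensor velocity onto the beam direction) are all illustrative assumptions.

```python
import math


def estimate_planar_velocity(azimuths, radial_speeds):
    """Least-squares fit of (vx, vy) to radial speed measurements.

    Assumed model (static world, hypothetical sign convention):
        r_i = vx * cos(a_i) + vy * sin(a_i)
    with azimuths a_i in radians in the sensor frame.
    """
    scc = scs = sss = bc = bs = 0.0
    for a, r in zip(azimuths, radial_speeds):
        c, s = math.cos(a), math.sin(a)
        # Accumulate the 2x2 normal equations A^T A v = A^T r.
        scc += c * c
        scs += c * s
        sss += s * s
        bc += c * r
        bs += s * r
    det = scc * sss - scs * scs
    if abs(det) < 1e-12:
        raise ValueError("azimuths do not constrain both velocity components")
    # Solve the 2x2 system with the closed-form inverse.
    vx = (sss * bc - scs * bs) / det
    vy = (scc * bs - scs * bc) / det
    return vx, vy


if __name__ == "__main__":
    angles = [2.0 * math.pi * k / 8 for k in range(8)]
    speeds = [10.0 * math.cos(a) + 0.5 * math.sin(a) for a in angles]
    print(estimate_planar_velocity(angles, speeds))
```

A real pipeline would also need to reject moving objects and weight noisy measurements; the sketch omits both.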

Prepared for the Worst: A Learning-Based Adversarial Attack for Resilience Analysis of the ICP Algorithm

Mar 08, 2024

Abstract:This paper presents a novel method to assess the resilience of the Iterative Closest Point (ICP) algorithm via deep-learning-based attacks on lidar point clouds. For safety-critical applications such as autonomous navigation, ensuring the resilience of algorithms prior to deployment is of utmost importance. The ICP algorithm has become the standard for lidar-based localization. However, the pose estimate it produces can be greatly affected by corruption in the measurements. Corruption can arise from a variety of scenarios such as occlusions, adverse weather, or mechanical issues in the sensor. Unfortunately, the complex and iterative nature of ICP makes assessing its resilience to corruption challenging. While there have been efforts to create challenging datasets and develop simulations to evaluate the resilience of ICP empirically, our method focuses on finding the maximum possible ICP pose error using perturbation-based adversarial attacks. The proposed attack induces significant pose errors on ICP and outperforms baselines more than 88% of the time across a wide range of scenarios. As an example application, we demonstrate that our attack can be used to identify areas on a map where ICP is particularly vulnerable to corruption in the measurements.

Pointing the Way: Refining Radar-Lidar Localization Using Learned ICP Weights

Sep 15, 2023

Abstract:This paper presents a novel deep-learning-based approach to improve localizing radar measurements against lidar maps. Although the state of the art for localization is matching lidar data to lidar maps, radar has been considered as a promising alternative, as it is potentially more resilient against adverse weather such as precipitation and heavy fog. To make use of existing high-quality lidar maps, while maintaining performance in adverse weather, matching radar data to lidar maps is of interest. However, owing in part to the unique artefacts present in radar measurements, radar-lidar localization has struggled to achieve comparable performance to lidar-lidar systems, preventing it from being viable for autonomous driving. This work builds on an ICP-based radar-lidar localization system by including a learned preprocessing step that weights radar points based on high-level scan information. Combining a proven analytical approach with a learned weight reduces localization errors in radar-lidar ICP results run on real-world autonomous driving data by up to 54.94% in translation and 68.39% in rotation, while maintaining interpretability and robustness.

Toward Certifying Maps for Safe Localization Under Adversarial Corruption

Sep 08, 2023

Abstract:In this paper, we propose a way to model the resilience of the Iterative Closest Point (ICP) algorithm in the presence of corrupted measurements. In the context of autonomous vehicles, certifying the safety of the localization process poses a significant challenge. As robots operate in a complex world, various types of noise can impact the measurements. Conventionally, this noise has been assumed to be distributed according to a zero-mean Gaussian distribution. However, this assumption does not hold in numerous scenarios, including adverse weather conditions, occlusions caused by dynamic obstacles, or long-term changes in the map. In these cases, the measurements are instead affected by a large, deterministic fault. This paper introduces a closed-form formula approximating the highest pose error caused by corrupted measurements using the ICP algorithm. Using this formula, we develop a metric to certify and pinpoint specific regions within the environment where the robot is more vulnerable to localization failures in the presence of faults in the measurements.

Towards Open World NeRF-Based SLAM

Jan 08, 2023

Abstract:Neural Radiance Fields (NeRFs) have taken the machine vision and robotics perception communities by storm and are starting to be applied in robotics applications. NeRFs offer versatility and robustness in map representations for Simultaneous Localization and Mapping. However, computational difficulties of multilayer perceptrons (MLPs) have led to reductions in robustness in state-of-the-art NeRF-based SLAM algorithms in order to meet real-time requirements. In this report, we seek to improve the accuracy and robustness of NICE-SLAM, a recent NeRF-based SLAM algorithm, by accounting for depth measurement uncertainty and using IMU measurements. Additionally, we extend this algorithm by providing a model that can represent backgrounds that are too distant to be modeled by NeRF.

Know What You Don't Know: Consistency in Sliding Window Filtering with Unobservable States Applied to Visual-Inertial SLAM

Dec 13, 2022

Abstract:Estimation algorithms, such as the sliding window filter, produce an estimate and uncertainty of desired states. This task becomes challenging when the problem involves unobservable states. In these situations, it is critical for the algorithm to "know what it doesn't know", meaning that it must maintain the unobservable states as unobservable during algorithm deployment. This letter presents general requirements for maintaining consistency in sliding window filters involving unobservable states. The value of these requirements when designing a navigation solution is experimentally shown within the context of visual-inertial SLAM making use of IMU preintegration.

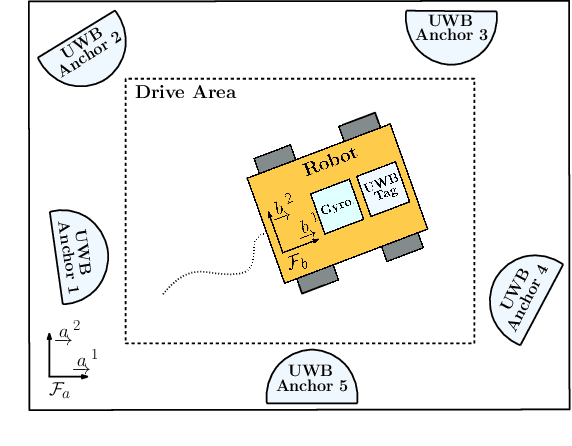

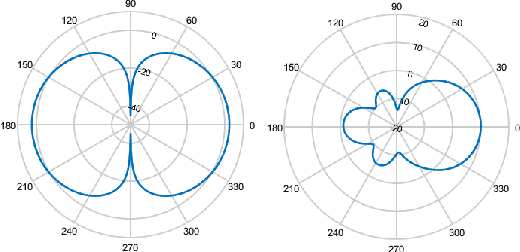

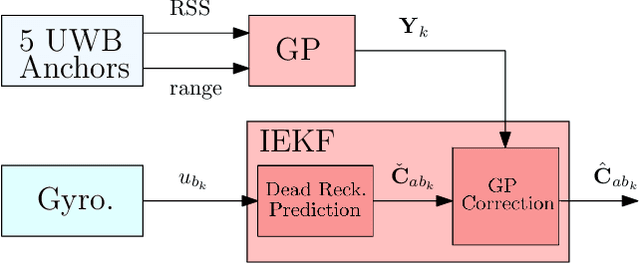

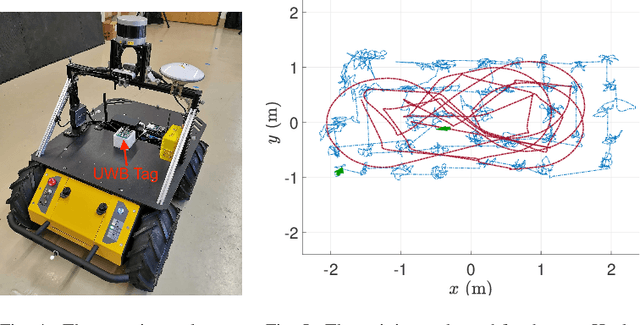

Heading Estimation Using Ultra-Wideband Received Signal Strength and Gaussian Processes

Sep 10, 2021

Abstract:It is essential that a robot has the ability to determine its position and orientation to execute tasks autonomously. Heading estimation is especially challenging in indoor environments where magnetic distortions make magnetometer-based heading estimation difficult. Ultra-wideband (UWB) transceivers are common in indoor localization problems. This letter experimentally demonstrates how to use UWB range and received signal strength (RSS) measurements to estimate robot heading. The RSS of a UWB antenna varies with its orientation. As such, a Gaussian process (GP) is used to learn a data-driven relationship from UWB range and RSS inputs to orientation outputs. Combined with a gyroscope in an invariant extended Kalman filter, this realizes a heading estimation method that uses only UWB and gyroscope measurements.
